Probability of occurrence of a software failure

By Mitch on Friday, 28 September 2012, 12:10 - Misc - Permalink

In two previous articles, I talked about the differences between bugs, software failures, and risks.

I left the discussion unfinished about the probability of occurrence of a software failure or a defect.

I think that assessing the probability of occurrence of a software failure is a hot subject. I've already seen many contradictory comments on this subject. It's also a hot subject for software manufacturers that are not used to risk assessment.

Risk: probability matters

We've seen in those previous articles that the criticality of a risk is strongly linked to:

- the severity of the potential injury,

- the probability of occurrence of the injury.

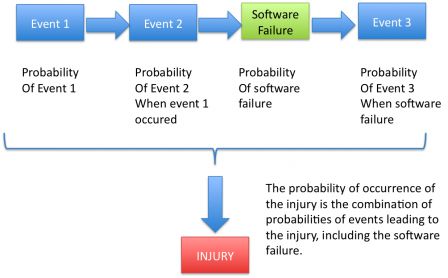

The probability of the injury is the combination of the probabilities of events that lead to the injury, including the software failure.

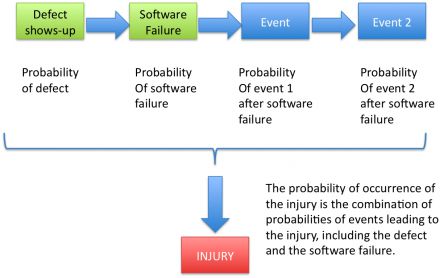

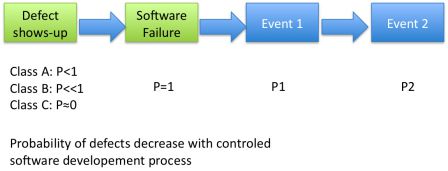

When the cause of the software failure is a defect, the diagram changes to this:

Probability of software failure

When a software failure could lead to an injury, the probability of occurrence of the injury is directly linked to the probability of the software failure.

But it is extremely difficult to set the probability of occurrence of software failures.

So many components are involved in software that laying the foundations of a probabilistic model is seriously compromised.

There are methods based on historical and statistical data, or even probabilistic calculations. But in general, they're not applicable to your case!

Zooming in on the first diagram above, we are missing an important piece of the puzzle:

Probability of defects

It's even more difficult to evaluate the probability of defects, since defects are human errors in coding and gaps in tests. With bugs, the only thing that we can say is:

- We've done our best to eliminate bugs, but some may still be hidden,

- We've done our best to prevent software failures for which the root cause is a bug, but perhaps there is a case that we haven't seen yet.

By the way, the IEC 62304 standard helps us to assert this. This is the main purpose of the standard! Better software development process, deeper risk assessment, better software, fewer bugs, fewer software failures!

Controlled process or not, we can't know the probability of a defect. As with software failures, we are missing a major piece of the puzzle:

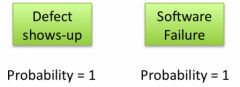

If you don't know, take the worst case

The situation is not desperate though. There is a very simple measure to take. If we don't know the probability, we should take the worst case. It means that a software failure will happen one day. Somewhere. Somehow.

Thus the probability of occurrence of a software failure should be set to 1.

There is an interesting discussion about that in section 4.4.3 of IEC/TR 80002-1. I invite you to read it.

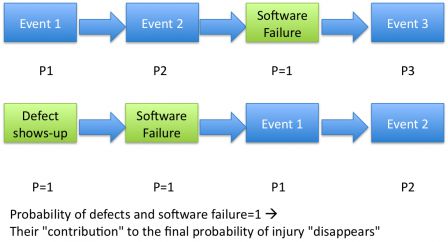

So our green boxes on the diagram have a probability of 1.

Probability = 1, also for risk???

It doesn't mean that the probability of risks where software is involved shall be set to 1. The final probability of a risk is the product of:

- The probability of the root cause(s) of the software failure, and

- The probability of the software failure, when the root cause occurs, and

- The probability of the events after the software failure.

Since the probability of the software failure is 1, the final probability is equal to the product of the probabilities of the root causes and of the events after the failure.
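The multiplication described above can be sketched in a few lines of Python. This is only an illustration: the function name `risk_probability` and the numeric values are made up, and the plain product assumes that each probability is already conditional on the previous events in the chain.

```python
from math import prod

def risk_probability(p_root_causes, p_events_after, p_software_failure=1.0):
    """Probability of the injury as a product along the chain of events.

    Each value is assumed to be the probability of that event given
    that the previous events in the chain have occurred.
    The software failure defaults to the worst case, 1.
    """
    values = [*p_root_causes, p_software_failure, *p_events_after]
    for p in values:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"not a probability: {p}")
    return prod(values)

# Hypothetical numbers, for illustration only.
p = risk_probability(p_root_causes=[0.01], p_events_after=[0.5])
print(p)
```

With the software failure fixed at 1, only the root causes and the events after the failure change the result.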

Let me give you some examples.

A software reads data from a CD-ROM

I limit this example to one root cause and assume that there is not a chain of hazardous events to generate the hazardous situation.

- When the CD is burned, there is a probability P1 that the burning process generates errors, and that data are corrupted.

- When data are corrupted on the CD-ROM, the software may read wrong information or may not be able to read it at all,

- When the CD-ROM is corrupted, it's possible that the software:

  - could read the corrupted data, but without any failure (the corrupted data are in a trailing block of unused bytes),

  - could read the corrupted data with a failure, like corrupted values of pixels displayed in an image,

  - could not read anything, because the file structure of the CD-ROM is totally corrupted.

- Since I can't determine when it goes ok or when it goes wrong with my software, depending on what data is corrupted, I set the probability of software failure to 1.

So the probability of the risk is equal to the probability of burning a corrupted CD-ROM. P-risk = P1
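The worst-case reasoning in this example can be sketched as follows. The value of `p1` and the candidate values for the unknown probability of failure are assumptions made up for illustration. The point is only that, whatever the true value is, the result never exceeds P1.

```python
# Hypothetical probability that burning produces a corrupted CD-ROM.
p1 = 1e-4

# The true probability that the software fails, given corrupted data,
# is unknown. Try a few candidate values (assumptions, not measurements).
candidates = [0.0, 0.1, 0.5, 0.9, 1.0]

# Risk probability for each candidate value.
p_risk = [p1 * p_fail for p_fail in candidates]

# Taking the worst case (p_fail = 1) gives an upper bound equal to P1.
worst_case = p1 * 1.0
assert all(p <= worst_case for p in p_risk)
print(worst_case == p1)  # True
```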

A software fails to monitor a hardware

I give in this example a chain of two hazardous events which generate the hazardous situation.

- A software monitors a hardware through an analog wire connection in a very noisy environment,

- There are electromagnetic perturbations that, despite the EMC shielding, are likely to generate errors in the signal,

- The analog signal is converted to digital data,

- The digital data may have been corrupted by these EMC perturbations, or the converter may not have been able to convert anything,

- Corrupted or not, the digital data are received by the software,

- The software may fail to monitor the hardware (e.g. loss of position of an engine, unable to measure a value of a sensor ...),

- Since I can't determine when it goes ok or when it goes wrong with my software, depending on what kind of EMC perturbation took place, I set the probability of software failure to 1.

So the probability of the risk is equal to the probability of two chained events:

- P1 = the probability of having strong EMC perturbations,

- P2 = the probability of the analog-digital converter being unable to convert the perturbed analog data.

A short probabilistic calculation gives: P-risk = P1 x P2
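Written out with conditional probabilities, the calculation looks like this. The event names E1 (strong EMC perturbations), E2 (the converter is unable to convert the signal) and F (software failure) are notation introduced here for illustration. The sketch assumes that P2 is the probability of E2 given that E1 has occurred, which is how its definition above reads.

```latex
\begin{align*}
P_{\text{risk}} &= P(E_1) \, P(E_2 \mid E_1) \, P(F \mid E_1 \cap E_2) \\
                &= P_1 \times P_2 \times 1 \\
                &= P_1 \times P_2
\end{align*}
```

If P2 were instead measured independently of the perturbations, the plain product would only hold when the two events are independent.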

What is the risk linked to a defect in the software?

This kind of situation is very different from the examples given above, because the root cause is a flaw in the design of the medical device. In short, the root cause is the software development team, which missed something. I can't be more specific, since there are thousands of reasons to have a defect. IMHO, this is the most difficult situation to grasp.

Why is it so difficult to understand it? Because we said that the probability of a software failure is 1. So, there will be a defect in the software, somewhere and it will generate a software failure one day, somewhere, somehow.

IEC 62304 reduces the probability of defects

Don't look too far for a way to manage this kind of software failure. The main mitigation action for risks linked to software failures generated by defects is applying the IEC 62304 standard!

The purpose of the standard is to decrease as sharply as possible the probability of having a defect:

- Class C: defects are very unlikely,

- Class B: defects are unlikely,

- Class A: defects are occasional.

Even if we apply IEC 62304 from A to Z, like in class C, there is still a very low probability that a defect occurs.

Software developed with class C constraints is very robust, but we can't assert it is perfect.

Acceptability of defects

That being said, it doesn't mean that a software failure linked to a defect, even with a low probability, is acceptable in a class C software.

What if a defect generates a software failure that could lead to an extremely hazardous situation, like patient death? Even with an extremely low probability, the consequence is extremely severe and leads to an unacceptable risk.

In this case:

- either there is a hardware solution to avoid the extremely hazardous situation, to decrease the probability even further,

- or there is no solution. A software solution is not acceptable, since it might be also subject to defects (we're going in a circle).

Situation #1 is the most comfortable. The software risk is mitigated by something outside software.

In situation #2, the risk remains. The benefit/risk balance has to be assessed with medical experts, to determine if the risk is acceptable.

Conclusion

It's not possible to assess the probability of a software failure. So it has to be set to 1. Thus the probability of the risk is only assessed from the probabilities of the chain of events that generate the software failure and the events generated by the software failure.

In the case of software failures generated by defects, the probability of defects is unknown. The purpose of IEC 62304 is to decrease this probability with more stringent development methods according to the class of software.

Risks related to these software failures, however, will still be present. It's only the policy of the manufacturer about residual risks that can determine if the risk, versus benefits, is acceptable or not.

Comments

We had the exact same discussion in one of our projects the other day. I usually read new posts on your blog, but I must have missed this one...

btw. I always recommend your blog to people I work with. It's often easier to get them to read this instead of looking into IEC/ISO documents =)

Thanks for the article.

Where you wrote "The main mitigation action of risks linked to software failures generated by defects is applying the IEC 62304 standard," I was wondering if "Software developed using IEC 62304" would be documented as the risk control on the hazard analysis.

It seems like this would end up being a risk control for all software-related hazards because all the software would be written to IEC 62304.

Hi Hans,

You're right :-) That's the purpose of IEC 62304. But ISO 14971 is there to force you to find mitigation actions specific to your software with top priority for "safety by design".

Thank you for this informative post. But I think that in some places you calculated the total probability by multiplication (if the events are dependent, you have to calculate the total probability with conditional probability formulas).