Artificial Intelligence in Medical Devices - Part 2 Design

By Mitch on Friday, 1 August 2025, 14:41 - Processes

After introducing this series in the previous post, we continue with MDAI design.

AI design is different from SW design. That's why IEC 62304, in its current version, doesn't help much for AI design. It takes a lot of imagination to interpret it for AI, especially for architectural design, detailed design, and the matching unit tests and integration tests.

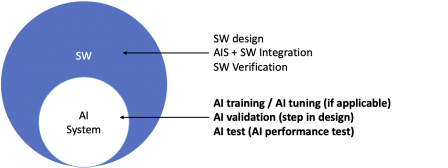

If we take the diagram from the previous post, we can see that AI design is a subset on MD design:

Obviously, if the MD is GUI-less and made only of an AI system, the AI design is almost all of the MD design. Some software items remain necessary to integrate the AI system into a usable medical device. Generally, this is a pipeline ensuring interoperability of the AI system with other components, internal or external to the MD. But even with these software items around the AI system, AI design is the task that takes most of the time in the whole software design.

AI design overview and steps

Specifications

The first step of AI design is common to any kind of software: software requirement specification.

In this step, we can rely on IEC 62304 clause 5.2.2, Software requirements content, which covers items like:

- AI functions and performance,

- AI inputs and outputs,

- etc.

In particular, the AI performance specification may contain acceptance criteria, such as a minimum sensitivity and a minimum specificity.

These software specifications may already be expressed at product level. E.g.: it may be possible to know and define sensitivity early at product level. In this case, the software requirement will be simply a copy of the product requirement, allocated to the AI system.
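As a minimal sketch of what such an allocated requirement could look like in a machine-readable form, the record below keeps a link to the product requirement it copies. The class name, the identifiers (`SRS-AI-001`, `PRD-012`) and the thresholds are hypothetical, chosen only for illustration; they do not come from IEC 62304.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PerformanceRequirement:
    """One AI performance requirement of the SRS (illustrative structure)."""
    req_id: str                      # hypothetical identifier scheme
    metric: str                      # e.g. "sensitivity" or "specificity"
    minimum: float                   # acceptance threshold (illustrative value)
    allocated_to: str                # software item the requirement is allocated to
    parent_id: Optional[str] = None  # product-level requirement it copies, if any

    def is_met(self, measured: float) -> bool:
        """True when the measured value reaches the acceptance threshold."""
        return measured >= self.minimum


# Assumed example: two product requirements copied into the SRS
# and allocated to the AI system.
SRS = [
    PerformanceRequirement("SRS-AI-001", "sensitivity", 0.90, "AI system", "PRD-012"),
    PerformanceRequirement("SRS-AI-002", "specificity", 0.85, "AI system", "PRD-013"),
]
```

Keeping `parent_id` on the record is one simple way to preserve traceability between the product requirement and its software-level copy.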

Architecture

We then continue by designing the architecture of the pipeline containing the AI model, and of the AI model itself.

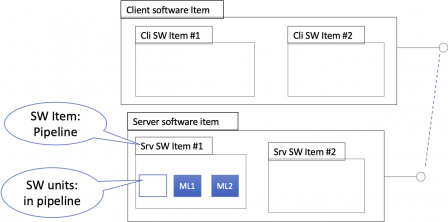

Here is a basic example of architecture, where the AI system is a pipeline on the server side, containing one or several AI models:

Borrowing IEC 62304 language, the AI model itself is a software unit, and the pipeline containing one or more AI models is a bigger software item.

Of course, your AI architecture can be different: with or without a pipeline, with or without a foundation model, or something else entirely. The point of the architecture is to remain able to pinpoint the AI system(s) in the software architecture.

During AI design, several candidate architectures may coexist for the AI model, with different model topologies and pipeline steps. Only one will be selected, usually at the end of AI validation.
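The decomposition described above can be sketched as follows: a pipeline (the software item) chains steps, one of which is the model (the software unit). This is only an illustration under assumptions: `Pipeline`, `normalize` and `toy_model` are hypothetical names, and the "model" is a plain threshold standing in for a trained AI model.

```python
from dataclasses import dataclass
from typing import Callable, List

# A step takes a list of values and returns a list of values.
Step = Callable[[List[float]], List[float]]


@dataclass
class Pipeline:
    """Software item: an ordered chain of steps around the AI model."""
    name: str
    steps: List[Step]

    def run(self, data: List[float]) -> List[float]:
        # Feed the output of each step into the next one.
        for step in self.steps:
            data = step(data)
        return data


def normalize(values: List[float]) -> List[float]:
    """Pre-processing step: rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero on constant input
    return [(v - lo) / span for v in values]


def toy_model(values: List[float]) -> List[float]:
    """Software unit standing in for an AI model: a fixed threshold."""
    return [1.0 if v >= 0.5 else 0.0 for v in values]


pipeline = Pipeline("ai_system", [normalize, toy_model])
```

Swapping `toy_model` for another candidate leaves the rest of the pipeline untouched, which is what makes it practical to compare candidate architectures.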

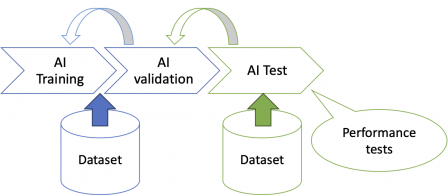

After architecture, we continue with the core of AI design. It is made of:

- AI training or tuning,

- AI validation,

- AI test.

For machine learning, these steps replace the detailed design and unit tests found in IEC 62304.

There can, and will, be iterations between these 3 phases, to obtain the best model, according to the specifications.

As with candidate architectures, different loss functions (or reward functions) and hyper-parameters can be tried during training iterations.

These steps can be seen as the detailed design step of a larger design process, with AI model integrated in a larger architecture.

Note: this is not fully true for the AI test phase, also named AI performance test. This phase can be seen as a major contribution to the validation of the device itself.

Here is a more detailed description of AI training, validation and test:

AI training or tuning

Input:

- AI model specifications (it can be a subset of SRS).

- A list of candidate models designed by data scientists.

- Dataset for training.

- A list of hyper-parameters, a loss function or a list of possible loss functions, to be set by data scientists.

Activities:

- Train all candidate models with the training dataset. Some candidates may be skipped if one model already clearly exceeds the predefined specification criteria.

- From a process viewpoint, the training method can be any suitable method for the models. It can be documented in the software development plan.

Output:

- Trained models.

- Chosen loss function and hyper-parameters set for optimal performance.
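The training activity above can be sketched as a loop over every combination of loss function and hyper-parameter. This is a toy illustration under assumptions: the model is a one-parameter linear fit `y = w * x` trained by gradient descent, and all function names are hypothetical. A real project would use its own models and training framework.

```python
from itertools import product


def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def mse_grad(w, xs, ys):
    """Gradient with respect to w of mean((w*x - y)^2)."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def mae_grad(w, xs, ys):
    """Subgradient with respect to w of mean(|w*x - y|)."""
    return sum(sign(w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


LOSS_GRADIENTS = {"mse": mse_grad, "mae": mae_grad}


def train(xs, ys, loss, learning_rate, epochs=200):
    """Fit the single weight w by plain gradient descent."""
    w = 0.0
    grad = LOSS_GRADIENTS[loss]
    for _ in range(epochs):
        w -= learning_rate * grad(w, xs, ys)
    return w


def train_candidates(xs, ys, losses, learning_rates):
    """Train one model per (loss, learning rate) pair and keep the settings."""
    return [
        {"loss": loss, "learning_rate": lr, "w": train(xs, ys, loss, lr)}
        for loss, lr in product(losses, learning_rates)
    ]
```

Each trained model is stored with the settings that produced it, so that the AI validation step can later report which loss function and hyper-parameters were retained.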

AI validation

Input:

- Trained models.

- AI validation plan with acceptance criteria, derived from AI model specifications.

Activities:

- Validate models with validation dataset.

- Model validation techniques will differ, depending on the type of model. The technique used for a given model is documented in the AI validation plan.

- A typical validation technique is cross-validation. These techniques will be described in the future IEC 63450 standard.

Output:

- One or more best trained models, with their hyper-parameters and loss function(s),

- AI validation report.
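As an illustration of the cross-validation technique mentioned above, here is a minimal k-fold sketch in plain Python. The function names and the `fit` / `score` callables are assumptions made for this example. In practice the data would usually be shuffled or stratified first, and a library implementation would be preferred.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    # Distribute the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_validate(fit, score, xs, ys, k=5):
    """Average the validation score of `fit` over k folds.

    fit(xs, ys) returns a trained model; score(model, xs, ys) returns a
    number where higher is better. Both are supplied by the caller.
    """
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(
            score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx])
        )
    return sum(scores) / len(scores)
```

Each sample is used for validation exactly once, and the averaged score can then be compared with the acceptance criteria of the AI validation plan.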

AI test

Input:

- AI model Test plan with acceptance criteria, derived from AI model specifications,

- One or more best trained models,

- Test dataset, separated from training/validation dataset.

Activities:

- Validate the performance of the best AI model with test dataset,

- The techniques to perform AI tests will be described in the future IEC 63521 standard.

Output:

- Best model,

- AI model Test report.
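A minimal sketch of the AI test activity for a binary classifier: compare the predictions of the selected model on the test dataset with the ground truth, and check the result against the acceptance criteria of the test plan. The function names and the report layout are hypothetical, and the sketch assumes the test dataset contains both positive (1) and negative (0) cases.

```python
def confusion_counts(labels, predictions):
    """Count true/false positives and negatives for binary labels (0 or 1)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    return tp, fp, tn, fn


def build_test_report(labels, predictions, criteria):
    """Compare measured metrics with the minimum values in `criteria`.

    `criteria` maps a metric name ("sensitivity" or "specificity") to its
    minimum acceptable value, as defined in the test plan.
    """
    tp, fp, tn, fn = confusion_counts(labels, predictions)
    measured = {
        "sensitivity": tp / (tp + fn),  # share of positives correctly detected
        "specificity": tn / (tn + fp),  # share of negatives correctly rejected
    }
    results = {
        name: {
            "measured": measured[name],
            "minimum": minimum,
            "passed": measured[name] >= minimum,
        }
        for name, minimum in criteria.items()
    }
    return {"results": results, "passed": all(r["passed"] for r in results.values())}
```

The test dataset is evaluated once, on the model already selected during AI validation, so the report is not biased by model selection.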

We will discuss this AI test phase in a future article about MDAI verification and validation.

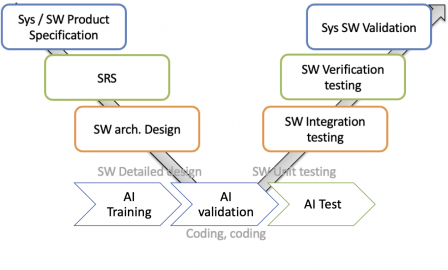

Integration in MD design

The three AI design steps above can be integrated in a broader MDSW design, according to IEC 62304 + (IEC 82304-1 SaMD or IEC 60601-1 electromedical device), we obtain a kind of big picture like this:

Classical software detailed design, coding and unit testing are replaced by the AI model design seen above.

AI design & development plan

The 2nd edition of IEC 62304 will contain a clause on the AI design and development plan. This new edition is expected by mid-2026.

Provisions on the AI design and development plan can be included in the software development plan. Based on what we've seen above, they can cover the steps specific to AI: training, validation and test. The description of these steps given above can be used as a starting point to write provisions in your own design and development plan.

And what about the datasets?

We see here that almost everything stems from data. For each step, we need a dataset:

- Training and AI Validation dataset, usually split into 80% for training and 20% for AI validation,

- Test dataset.
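The split described above can be sketched as follows. The 80% / 20% ratio between training and AI validation comes from the text; the size of the test dataset (`test_fraction`), the fixed seed and the function name are assumptions made for this example. With patient data, splitting by patient rather than by sample is usually needed to avoid leakage between datasets.

```python
import random


def split_dataset(samples, test_fraction=0.2, validation_fraction=0.2, seed=42):
    """Return (training, validation, test) lists with no sample in common."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible

    # Set the test dataset aside first so it stays separated from the rest.
    n_test = round(len(shuffled) * test_fraction)
    test, pool = shuffled[:n_test], shuffled[n_test:]

    # Split the remaining pool 80% / 20% between training and AI validation.
    n_validation = round(len(pool) * validation_fraction)
    validation, training = pool[:n_validation], pool[n_validation:]
    return training, validation, test
```

For example, `split_dataset(range(100))` returns 64 training samples, 16 validation samples and 20 test samples.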

Having the right datasets is of utmost importance to reach the performance objectives defined in the SRS. Thus, data deserves its own management process.

We'll see that in the next article.

Confiance AI Body of Knowledge

For readers wanting to go deeper into the subject of AI design, the Confiance AI foundation established what they call a Body Of Knowledge. It models the activities for the engineering of a critical ML-based system. It is an interesting resource for defining your own processes when you design a safety-critical software system, or a software + hardware system.