En route to Software Verification: one goal, many methods - part 3

By Mitch on Friday, 14 December 2012, 12:35 - Processes

In my last post, I explained the benefits of static analysis. This software verification method is mainly relevant for finding bugs in mission-critical software. But it also fits the need for bug-free code in less critical software.

Static analysis can be seen as an achievement in the implementation of software verification methods. Yet other methods exist that fit very specific purposes.

Heavy Metal

In reference to my previous posts, I place these test methods in the Heavy Metal category!

Perhaps some may say these methods aren't so heavy metal. It depends on your experience with these methods, of course.

Automated GUI testing

Automated Graphical User Interface testing sounds like something very simple, but it is not. It requires a GUI testing engine and a lot of patience from the people who feed the engine with tests.

The main impact of GUI testing is on the composition of the test team. The GUI tests will almost certainly be assigned to one (poor) person, who will work on this task full-time. A good option is to let him or her do other types of testing and integration as well!

I personally haven't yet been satisfied by any GUI testing tool I've found, whether open-source or commercial. If you can name one that you're happy with, you're welcome!

Performance testing

Testing performance is less specific than testing a GUI because it involves the whole architecture of a product, not only its GUI. As a consequence, it involves the whole testing team (this is my experience, maybe not yours).

Here again, a lot of tools exist to do performance tests. Most of them focus on web apps or databases, where the concerns are load balancing and load increase.

This is probably not relevant for embedded software. Tailor-made test programs are a better way to test a specific issue on such software.
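As an illustration of what a tailor-made test program might look like, here is a minimal sketch in Python. It times repeated calls to a routine and compares the worst observed latency with a budget. The routine `process_sample` and the 50 ms budget are assumptions made up for the example. On a real embedded target, the same idea would more likely be written in the device's own language and run on the hardware itself.

```python
import time


def measure_latencies(func, runs):
    """Call func() `runs` times and return each call's duration in seconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        latencies.append(time.perf_counter() - start)
    return latencies


def within_budget(latencies, budget_s):
    """Return True when the worst observed latency does not exceed the budget."""
    return max(latencies) <= budget_s


def process_sample():
    """Hypothetical stand-in for the routine under test."""
    return sum(i * i for i in range(1000))


if __name__ == "__main__":
    latencies = measure_latencies(process_sample, runs=200)
    # The 50 ms budget is an assumed requirement, not a value from this post.
    print("worst case (s):", max(latencies))
    print("within budget:", within_budget(latencies, budget_s=0.050))
```

Looking at the worst case rather than the average is a deliberate choice here: for a device, one slow response is usually what matters.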

Security testing

Static analysis tools can find security holes in your code, like buffer overruns. Other security tests may be necessary for your devices. It may be a good idea to ask IT security consultants to look for security holes in your devices.

Embedded software with a wireless connection (even one used very occasionally, for example only during maintenance) falls within the scope of this kind of test.

Statistical methods

Statistical methods are made to test complex algorithms. It is not possible to test all combinations of input values in complex algorithms. One way to increase the level of confidence in an algorithm is to use such methods.

Most algorithms are based on laws of physics or mathematics, and can be tested to ensure that the algorithm obeys the law.
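To make this idea concrete, here is a small sketch in Python of a randomized (Monte Carlo style) check. The algorithm under test, `rotate`, is a hypothetical example chosen for illustration, and the law being checked is that a rotation preserves the distance of a point to the origin. The input ranges and tolerances are assumptions as well.

```python
import math
import random


def rotate(x, y, angle):
    """Hypothetical algorithm under test: rotate the point (x, y) by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return x * c - y * s, x * s + y * c


def count_law_violations(algorithm, trials=10_000, seed=0):
    """Feed random inputs to the algorithm and count the cases where the law
    'a rotation preserves the distance to the origin' does not hold."""
    rng = random.Random(seed)  # fixed seed so that a failing run can be replayed
    violations = 0
    for _ in range(trials):
        x = rng.uniform(-1000.0, 1000.0)
        y = rng.uniform(-1000.0, 1000.0)
        angle = rng.uniform(-math.pi, math.pi)
        rx, ry = algorithm(x, y, angle)
        before = math.hypot(x, y)
        after = math.hypot(rx, ry)
        # Compare with a tolerance, since floating-point results are not exact.
        if not math.isclose(before, after, rel_tol=1e-9, abs_tol=1e-9):
            violations += 1
    return violations


if __name__ == "__main__":
    print("violations:", count_law_violations(rotate))
```

Such a check does not prove the algorithm correct. It only increases confidence, in proportion to the number and variety of inputs tried.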

Statistical tests call for techniques like Monte Carlo simulations or chi-squared tests, to name a few. Although I don't have experience in statistics, I once had to use a Monte Carlo simulation engine. It was a commercial add-on to Excel, which was really nice to use.
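For readers who have never seen one, a chi-squared goodness-of-fit test can be sketched in a few lines of Python. The statistic is the sum of (observed - expected)² / expected over all bins. The scenario below is an assumption for illustration: an algorithm whose outputs are sorted into 6 bins and are expected to be evenly spread. The threshold 11.070 is the usual table value for 5 degrees of freedom at the 5% significance level.

```python
def chi_squared(observed, expected):
    """Return the chi-squared statistic: sum of (O - E)^2 / E over all bins."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Table value for 5 degrees of freedom (6 bins - 1) at the 5% level.
CRITICAL_5PCT_DF5 = 11.070


def looks_uniform(counts):
    """Return True if the 6 bin counts are compatible with a uniform spread."""
    if len(counts) != 6:
        raise ValueError("this sketch only handles 6 bins")
    expected = [sum(counts) / len(counts)] * len(counts)
    return chi_squared(counts, expected) <= CRITICAL_5PCT_DF5


if __name__ == "__main__":
    # Hypothetical bin counts, not real measurements.
    print(looks_uniform([9, 11, 10, 10, 12, 8]))
    print(looks_uniform([30, 5, 5, 5, 5, 10]))
```

A statistics library would normally compute the threshold for any number of bins. The hard-coded value keeps the sketch free of dependencies.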

The Big Picture

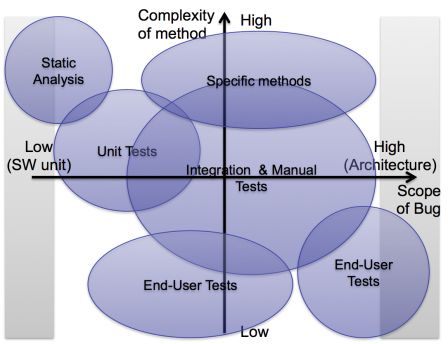

To finish this series of posts, here is a diagram showing the position of the different verification methods we've seen. I tried to place them according to their complexity and the type of bugs found.

You may certainly change the size and the position of the ellipses, given your experience and the kind of medical devices you work on. And I don't include clinical trials in end-user tests done for software verification, as discussed in this article.

The most important point on this diagram is the projection of the ellipses on the x-axis. The union of the projections of all ellipses shall cover the whole x-axis, i.e. all types of bugs shall be sought by these methods:

- High-level: use cases and architecture,

- Mid-level: algorithms and components,

- Low-level: language pitfalls, coding rules and software units.
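The coverage rule stated above can also be checked mechanically. In this Python sketch, each method's projection on the x-axis is an interval, and the function returns the parts of the axis that no method covers. The numeric positions are purely hypothetical, since the diagram has no scale.

```python
def uncovered_gaps(intervals, lo, hi):
    """Return the parts of [lo, hi] not covered by any (start, end) interval."""
    gaps = []
    cursor = lo  # everything left of the cursor is already covered or reported
    for start, end in sorted(intervals):
        if start > cursor:
            gaps.append((cursor, min(start, hi)))
        cursor = max(cursor, end)
        if cursor >= hi:
            break
    if cursor < hi:
        gaps.append((cursor, hi))
    return gaps


if __name__ == "__main__":
    # Hypothetical projections on an axis going from 0 (low-level) to 10 (high-level).
    projections = {
        "static analysis": (0, 3),
        "unit tests": (2, 5),
        "integration tests": (4, 8),
        "end-user tests": (7, 10),
    }
    print(uncovered_gaps(projections.values(), 0, 10))
    # Without static analysis, the low end of the axis is left uncovered.
    del projections["static analysis"]
    print(uncovered_gaps(projections.values(), 0, 10))
```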

There is a zone at each end of the axis (shaded grey) where only one method is able to find bugs efficiently:

- For very high-level bugs, only end-users can find them,

- For very low-level bugs, only static analysis can find them.

When these kinds of bugs are found in the field, after software validation:

- Very high-level bugs are discrepancies between the result given by the software and the result expected by the user, which require medical knowledge to be found and analyzed,

- Very low-level bugs are any kind of error in the code that can lead to erratic and non-reproducible behavior, or simply a crash.

Only a fully controlled software development process is able to wipe out all bugs, including those at the extremities of the diagram.

He forgot code reviews!

Some may say that I didn't mention peer code reviews or code inspections (like Fagan inspections, as mentioned in a comment on this post). These are a kind of verification method, but no live software is involved:

- the software is not running,

- no test tool is running either,

- only developers can do it.

People only need a sheet of paper or a text editor to do code reviews. Code reviews are made by developers, not testers. That's why I didn't put them in the scope of this series.

One could argue that unit tests are written by developers. Yes, it's true. But unit tests may be run by a tester or a build manager, without the help of any developer.

That's why I don't include code reviews in software verification, nor requirements reviews or architecture reviews, for example.

Conclusion

I tried to give an overview of the software verification methods that exist and how complementary they are. There are probably other methods that are used by software companies or computer science labs. But I think that I have covered 90%-95% of all methods. If you know some others, feel free to mention them in the comments!